Are “Flat-Line” Outcomes the Kiss of Death? How to Use a Registry for Outcomes Improvement Research

Despite a huge investment in health care, we have yet to demonstrate real progress in improving outcomes. A major study of patient outcomes last year revealed disappointing “flat-line” results for patient-centered medical home services: outcomes showed no difference over time, despite significant expenditures. And that’s just the beginning.

Assessments of cancer outcomes, preventive screenings and chronic disease indicators show similarly disappointing results. It’s hard to accept that we have failed to improve mortality or morbidity in a way that can be attributed to medical management and treatment, rather than to lifestyle and nutrition. In most cases, however, that’s where we are.

PQRS Measures and Other Benchmarks Are Only the Starting Point for Assessment

So how do you know whether you have made any difference at all in patient health? Do outcomes that beat the standard in one practice prove that the practice has done a better job with patients than another with poorer outcomes? No, and here’s why:

If you have embarked on a quality program, you are likely focusing your review of data on benchmarks, or patient results compared to a standard or measure. Measurement of outcomes compared to a norm is the method that Medicare, commercial health plans and virtually all organizations use to measure quality. But this kind of measurement—capturing outcomes for one point in time—hides flat-line results.

Data based on single benchmarks may appear to differentiate results, but this is deceptive. The reality is that some practice populations will have different outcomes than others because of factors outside of medical management, such as socioeconomic status or extent of disease. And many organizations stop pursuing improvement and focus only on the providers who fall outside the “norm.”

Tracking Outcomes Over Time Provides a Mechanism to Review Change and Test Interventions

By contrast, using Registry data and tools to plot single variable outcome measures over time—for a patient, a provider, a group of providers and a system of care—will reveal a truer and more actionable picture of performance. A single snapshot of an outcome measure at one point in time provides little insight for improving a practice. But measures presented over time indicate trends, help identify base-line prevalence of important measures for a practice and provide the grist for improvement.

Time-based reports are like stock charts that monitor our markets; instead of markets, we track medical care outcomes. These outcomes may be process measures, quality of care measures defined by CMS or clinical outcomes of care, such as utilization of services or functional health. We’re looking for the delta, or change.

Flat-line Outcomes Should Prompt Questions, Not Blame

Registry experience has taught us that many of the PQRS measures required by CMS will track in a mostly flat line, across all measures provided by the practice. This is interesting. A single flat-line outcome measure raises questions that are more about patients; a cluster of flat-line measures for a practice raises questions about that practice. An old adage holds that a system is designed to get exactly the output it produces. If you base your system on benchmarks, you will not achieve the improvement you need. A trended outcome view establishes the base-line for your practice’s performance and sets the stage for proving that you can do better, given a stable population of patients.

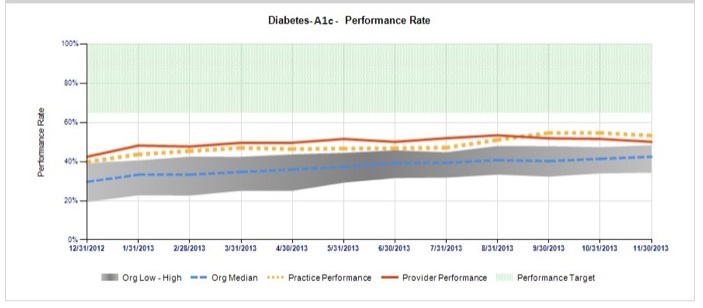

Look at the sample chart that measures a practice’s A1c levels for the population of its diabetic patients, below. The line shows that the average A1c level varies little over time; marking the average every month produces a flat line. In fact, even the distribution of values varies little. There could be several reasons for this. For example, there may be just a few patients providing many A1c levels so that the flat line is due to lack of variation in the types of patients coming to the practice. Or, the patients being measured may be new to the practice, and each patient is only providing a single A1c measure. A tracked outcome that is flat does not tell us all there is to know about the practice, but it makes us begin to wonder.
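To make the monthly tracking concrete, here is a minimal sketch in Python of how such a flat line could be checked. Everything in it is an assumption for illustration: the readings are invented, and the helper names (`monthly_means`, `trend_slope`, `readings_per_patient`) are hypothetical, not part of any Registry tool. It averages A1c by month, estimates the month-to-month trend, and counts how many readings each patient contributes, which speaks to the two explanations above.

```python
from collections import Counter, defaultdict
from statistics import mean

# Hypothetical readings, invented for illustration:
# (patient_id, month as "YYYY-MM", A1c in percent)
readings = [
    ("p1", "2014-01", 7.9), ("p2", "2014-01", 8.1),
    ("p1", "2014-02", 8.0), ("p3", "2014-02", 8.0),
    ("p1", "2014-03", 8.1), ("p2", "2014-03", 7.9),
    ("p1", "2014-04", 8.0), ("p4", "2014-04", 8.0),
]

def monthly_means(rows):
    """Average A1c per month; "YYYY-MM" keys sort chronologically."""
    by_month = defaultdict(list)
    for _patient, month, value in rows:
        by_month[month].append(value)
    return {month: mean(values) for month, values in sorted(by_month.items())}

def trend_slope(values):
    """Least-squares slope of the values against their position.

    Assumes one value per consecutive month with no gaps, so the
    result reads as "change in average A1c per month".
    """
    n = len(values)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    num = sum((i - x_bar) * (v - y_bar) for i, v in enumerate(values))
    den = sum((i - x_bar) ** 2 for i in range(n))
    return num / den

def readings_per_patient(rows):
    """How many A1c readings each patient contributes."""
    return Counter(patient for patient, _month, _value in rows)

means = monthly_means(readings)
slope = trend_slope(list(means.values()))
counts = readings_per_patient(readings)

print("Monthly averages:", means)
print("Change per month:", round(slope, 4))
print("Readings per patient:", dict(counts))
```

In this made-up data the monthly average barely moves, and one patient supplies half of the readings. That is the kind of pattern that should prompt a closer look at who is actually being measured before drawing conclusions about the practice.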

Registries Facilitate Outcome Improvement Through Research

A stable measure over time provides the base-line estimate to allow for accurate research planning. As an editor of a medical journal, I review many papers that purport to show that one treatment plan is better than the usual treatment, based on a change from a base-line outcome measure for the usual treatment. Often, however, the planned base-line measure turns out to be wrong, invalidating the study findings, because the researchers do not have access to the base-line measures over time for the patients being entered in a clinical trial. Tracked measures over time for an entire practice will establish better estimates of base-line performance so that improvement can be reliably studied. This is an advantage of doing research through a Registry that collects all practice data and measures more than outcomes against benchmarks.

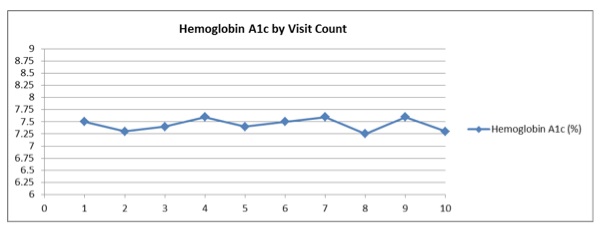

Tracking many single outcome measures over time is a start; it provides one view of a practice. But making inferences about relationships, testing interventions based on those inferences, and assessing results over time are required to improve patient outcomes. For example, the A1c levels for a practice can be related to the number of yearly visits for patients with diabetes. A practice would hope that as the number of visits goes up, the A1c level would decline. This would suggest that the visit is a valuable service. But a flat line when A1c is plotted against the number of visits should raise concerns about the value of the visits and would require additional research into why no meaningful association occurs.

The figure below depicts the relationship between A1c levels (Y-axis) and number of visits (X-axis) for a hypothetical practice. As the number of visits goes from 1 to 10, the average A1c value does not vary. One could infer that some providers see patients too many times to make a difference in outcomes, or that what occurs in a visit does little to alter a patient’s A1c level. A flat line between two variables is a canary in the mineshaft, signaling the need for systemic improvement.
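The relationship between visits and A1c can also be summarized with a simple fitted line. The sketch below is an assumption-laden illustration, not a Registry method: the patient data are invented, and `least_squares` is a hypothetical helper that fits an ordinary least-squares line by hand. A slope near zero corresponds to the flat line described above, and, as with the chart, it shows association only, not cause.

```python
from statistics import mean

# Hypothetical per-patient data, invented for illustration:
# (number of visits in the year, average A1c in percent)
patients = [
    (1, 8.0), (2, 8.1), (3, 7.9), (4, 8.0), (5, 8.0),
    (6, 8.1), (7, 7.9), (8, 8.0), (9, 8.0), (10, 8.0),
]

def least_squares(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

visits = [v for v, _a1c in patients]
a1c = [a for _v, a in patients]
slope, intercept = least_squares(visits, a1c)

# Slope is the estimated change in A1c for each additional visit.
print("Change in A1c per additional visit:", round(slope, 4))
print("Fitted A1c at zero visits:", round(intercept, 2))
```

With these invented numbers, each extra visit is associated with almost no change in A1c. A practice hoping that visits help would want to see a clearly negative slope instead, and would then still need a designed study to test whether the visits are the reason.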

As with stock charts, we all want to see better, higher performance, and depicting outcome measures over time will help us get to better and more effective results. It’s important to note that measuring outcomes over time, in and of itself, does not define causality between variables. But this approach to outcomes assessment opens the door to asking better questions about performance, which in turn suggests research protocols to test interventions and refine treatment modalities. Registries provide a mechanism for collecting and displaying outcomes over time, testing interventions against outcomes and viewing multiple outcome associations.

And that kind of proven outcomes research is the only way we’re going to discover treatments that actually work to improve patient health.

Founded in 2002, ICLOPS has pioneered data registry solutions for improving population health. Our Population Health with Grand Rounds Solution engages providers in an investigative, educational process to achieve better outcomes. ICLOPS is a CMS Qualified Clinical Data Registry.

